How User Intent Prediction Improves Academic Research

User intent prediction transforms how researchers find information by analyzing search behavior, context, and patterns. This approach goes beyond simple keyword matching, addressing challenges like vocabulary mismatches and evolving research needs across multiple queries. Key takeaways:

- What It Is: Predicts what researchers are looking for by analyzing search queries, interaction history, and context.

- Why It Matters: Improves search relevance and efficiency, especially in multi-query sessions.

- Techniques Used: Machine learning, neural networks (e.g., Bi-LSTM), and clustering classify and refine search intent.

- Real-World Applications: Tools like SciNet and IntellectSeeker provide dynamic search interfaces, helping researchers refine and explore topics effectively.

- Performance Gains: Interactive, context-aware systems can substantially improve task-level performance over keyword-only methods, potentially doubling it.

This shift in academic search tools makes finding relevant sources faster and more accurate, reducing the effort required for literature reviews and research discovery.

Research Studies on User Intent Prediction Methods

When it comes to predicting academic search behavior, several approaches stand out. Methods like feature extraction, neural networks, and clustering techniques have proven effective in understanding how researchers search for information.

Machine Learning Feature Extraction

Machine learning models break down user intent by analyzing aspects such as content, structure, and sentiment. Interestingly, structural features often play a leading role in boosting prediction accuracy. Researchers from the University of Massachusetts Amherst highlighted this point:

We find that structural features contribute most to the prediction performance [in information-seeking conversations].

Some systems go beyond analyzing search queries alone. For instance, they incorporate pre-search context, such as related emails or previous articles, to identify what sparked the researcher’s interest. A notable example is the SciNet system, which uses fast online regression to estimate search intent in real time. This creates quick feedback loops, enabling researchers to refine their searches as they progress.
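To make the idea of online regression more concrete, here is a minimal sketch. It is not SciNet's actual implementation: it assumes each keyword is described by a small feature vector and that the researcher's feedback is a relevance value between 0 and 1. The class name `OnlineIntentModel`, the learning rate, and the toy feature values are all hypothetical.

```python
class OnlineIntentModel:
    """Linear model updated one feedback event at a time (stochastic gradient descent)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.learning_rate = learning_rate

    def predict(self, features):
        # Estimated relevance of a keyword to the current intent.
        return sum(w * x for w, x in zip(self.weights, features))

    def update(self, features, feedback):
        # Move the weights a small step toward the observed feedback.
        error = feedback - self.predict(features)
        self.weights = [
            w + self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]


# Hypothetical keyword features: [relates to recognition, relates to gaming]
keywords = {
    "gesture recognition": [1.0, 0.0],
    "hidden Markov models": [0.9, 0.1],
    "video games": [0.0, 1.0],
}

model = OnlineIntentModel(n_features=2)

# The researcher repeatedly marks one keyword as relevant and another as not.
for _ in range(20):
    model.update(keywords["gesture recognition"], 1.0)
    model.update(keywords["video games"], 0.0)

# Keywords that were never rated are still ranked by their similarity to rated ones.
ranked = sorted(keywords, key=lambda k: model.predict(keywords[k]), reverse=True)
print(ranked)
```

Because each update touches only one example, the estimate can be refreshed after every interaction, which is what makes a quick feedback loop possible.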

Neural Network Models for Intent Detection

Neural networks take intent prediction a step further by learning contextual features directly from the data. Traditional methods depend on manually defined features, whereas neural classifiers automate this step, which makes them particularly effective in multi-turn research sessions where intent evolves with each query.

A standout example comes from Pennsylvania State University and the University of Illinois at Chicago. Researchers developed a model that combines a Bidirectional Long Short-Term Memory (Bi-LSTM) network with a Conditional Random Field (CRF) layer to extract key phrases from scholarly documents. This model captures both long-distance dependencies in text and the relationships between labels, outperforming traditional supervised methods across three major scholarly datasets. Such neural approaches excel at uncovering subtle semantic patterns that simpler methods might miss.
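The "relationships between labels" part can be illustrated with Viterbi decoding, the standard way to pick the best label sequence in a linear-chain CRF. The sketch below is not the researchers' code: the per-token scores are made up (in a Bi-LSTM-CRF they would come from the Bi-LSTM), and the B/I/O labels and transition penalty are assumptions chosen for illustration.

```python
def viterbi_decode(emissions, transitions, labels):
    """Return the highest-scoring label sequence.

    emissions[t][label]: score of a label for token t.
    transitions[(prev, cur)]: score for moving from label prev to label cur.
    """
    # best[t][label] = (score of the best path ending in label at t, previous label)
    best = [{label: (emissions[0][label], None) for label in labels}]
    for t in range(1, len(emissions)):
        column = {}
        for cur in labels:
            prev_best = max(
                labels, key=lambda p: best[t - 1][p][0] + transitions[(p, cur)]
            )
            score = (
                best[t - 1][prev_best][0]
                + transitions[(prev_best, cur)]
                + emissions[t][cur]
            )
            column[cur] = (score, prev_best)
        best.append(column)

    # Backtrack from the best final label.
    last = max(labels, key=lambda label: best[-1][label][0])
    path = [last]
    for t in range(len(emissions) - 1, 0, -1):
        last = best[t][last][1]
        path.append(last)
    return path[::-1]


labels = ["B", "I", "O"]  # begin key phrase, inside key phrase, outside
transitions = {(p, c): 0.0 for p in labels for c in labels}
transitions[("O", "I")] = -5.0  # a phrase cannot continue if it never began

# Hypothetical scores for the tokens "use", "Markov", "models".
emissions = [
    {"B": 0.2, "I": 0.1, "O": 2.0},
    {"B": 1.0, "I": 1.5, "O": 0.3},
    {"B": 0.1, "I": 2.0, "O": 0.5},
]

tags = viterbi_decode(emissions, transitions, labels)
print(tags)
```

Picking the top label for each token independently would give O, I, I, which is not a valid phrase. Scoring whole sequences lets the transition penalty steer the second token to B instead.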

Clustering Methods for Intent Classification

Clustering techniques add another layer of insight by grouping queries based on interaction patterns. These methods classify searches into taxonomies such as navigational (finding a specific paper), informational (exploring a topic), or transactional (downloading resources). This categorization allows search systems to tailor results to specific user needs.

In practice, clustering is particularly useful for extracting exemplar terms - concise phrases that summarize high-level topics from documents. This enhances semantic metadata extraction, especially in multi-query research sessions where researchers' needs evolve as they delve deeper into a topic. However, while clustering is excellent for categorizing broad intent types, neural classifiers often outperform it when dealing with the nuanced, long-distance dependencies found in complex academic texts.
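As a rough illustration of grouping queries by interaction patterns, the following sketch runs a plain k-means over three hypothetical signals per query: results clicked, minutes spent reading, and files downloaded. The feature choice, the values, and the starting centroids are assumptions, and the algorithm only produces unnamed groups. Labeling a group as navigational, informational, or transactional remains a separate interpretive step.

```python
def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign points to the nearest centroid, then recompute centroids."""
    assignments = []
    for _ in range(iterations):
        assignments = [
            min(
                range(len(centroids)),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
            for p in points
        ]
        new_centroids = []
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return assignments


# Hypothetical (results_clicked, minutes_reading, downloads) per query.
points = [
    (1, 0.5, 0), (1, 1.0, 0),    # one quick click, likely looking for a known paper
    (6, 12.0, 0), (8, 15.0, 1),  # many clicks and long reading, likely exploring
    (1, 1.0, 3), (2, 0.5, 4),    # few clicks but several downloads
]

clusters = kmeans(points, centroids=[points[0], points[2], points[4]])
print(clusters)
```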

How Intent Prediction Works in Academic Literature Search

Supporting Multi-Query Research Sessions

Academic research is rarely a one-and-done process. Researchers often refine their queries as they uncover new insights during a session. Intent prediction systems are designed to follow this evolving search behavior, making it easier to manage multi-query research.

Take the SciNet system, developed by the Helsinki Institute for Information Technology, as an example. It enables users to explore 50 million articles through an intuitive radar-screen interface. Imagine searching for "3D gestures." The system might initially present broader options like "video games" or "gesture recognition." If the user selects "gesture recognition", SciNet responds by narrowing the focus to sub-topics such as "hidden Markov models" or "nearest neighbor approach."

This interactive feedback loop allows researchers to fine-tune keyword prominence, striking a balance between precise results and broader exploration. It’s a practical example of how recognition often outperforms recall: spotting a relevant term on screen is usually easier than remembering the right search term unprompted. This dynamic refinement process is key to delivering search results that align more closely with a researcher’s evolving focus.

Improving Topic-Specific Search Results

Intent prediction doesn’t just stop at supporting multi-query sessions - it also bridges the gap between general queries and the specialized language of academic literature. This is crucial in addressing the mismatch between everyday terms and the precise vocabulary used in scholarly writing. For instance, the IntellectSeeker platform, showcased at KSEM 2024, employs a GPT-3.5-turbo model with multiple rounds of few-shot learning to translate broad, general queries into professional academic terminology. By doing so, it significantly enhances the relevance of search results. The system doesn’t just rely on language transformation; it also integrates a probabilistic model to filter articles based on explicit user needs and observed behaviors. To make things even more user-friendly, it presents one-line summaries and word clouds, helping researchers quickly grasp the content.
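The few-shot idea can be sketched as a prompt builder. This is not IntellectSeeker's prompt: the instruction text and both example pairs are hypothetical, and the `role`/`content` layout is simply the message format commonly used by chat-style LLM APIs. No model is called here; the function only assembles the input.

```python
# Hypothetical demonstration pairs: (everyday query, academic phrasing).
FEW_SHOT_EXAMPLES = [
    ("why do people forget things",
     "mechanisms of memory decay and interference in human cognition"),
    ("computers that read handwriting",
     "offline handwritten text recognition using neural networks"),
]


def build_messages(user_query, examples=FEW_SHOT_EXAMPLES):
    """Assemble a few-shot chat prompt that asks for an academic rewrite of a query."""
    messages = [{
        "role": "system",
        "content": "Rewrite the user's query using precise academic terminology.",
    }]
    for plain, academic in examples:
        # Each pair is shown to the model as a past exchange to imitate.
        messages.append({"role": "user", "content": plain})
        messages.append({"role": "assistant", "content": academic})
    messages.append({"role": "user", "content": user_query})
    return messages


messages = build_messages("how do apps guess what I want to search")
for message in messages:
    print(message["role"], "->", message["content"])
```

The rewritten query returned by the model would then be sent to the literature search in place of the original wording.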

A study presented at CIKM '10, published in the ACM Digital Library, emphasized the importance of query context in understanding search intent:

A query considered in isolation offers limited information about a searcher's intent. Query context that considers pre-query activity... can provide richer information about search intentions.

Measured Performance Improvements from Intent Prediction

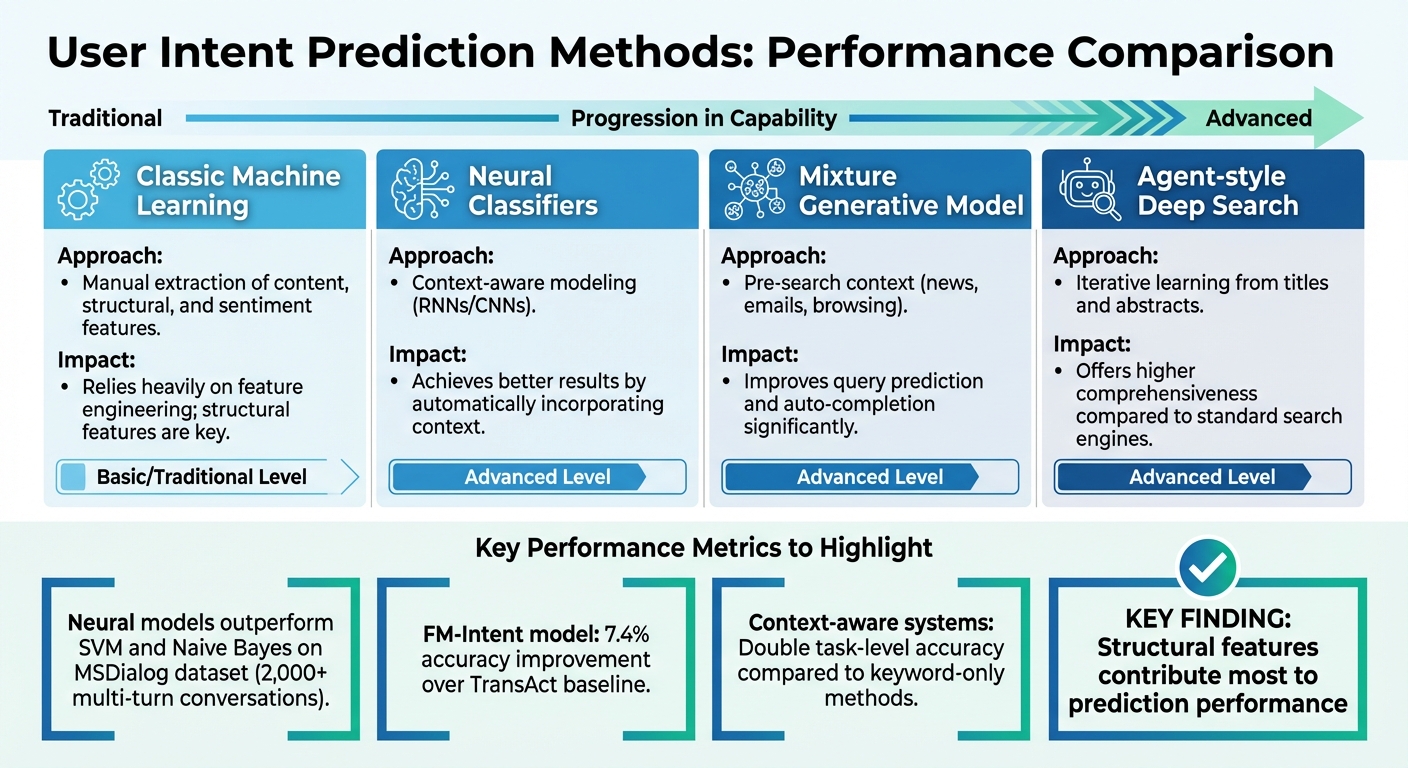

User Intent Prediction Methods Comparison: Performance and Approaches in Academic Search

Performance Comparison Across Different Methods

Neural classifiers have proven to be consistently more effective than traditional machine learning methods in predicting user intent. A study from the University of Massachusetts Amherst, featured in the ACM Digital Library, evaluated various approaches using the MSDialog dataset, which contains over 2,000 multi-turn information-seeking conversations. Neural models outshine classic methods like SVM and Naive Bayes by automatically incorporating context, whereas traditional models rely heavily on manual feature engineering.

Interestingly, structural features - such as the position of an utterance within a conversation and its length - play the most critical role in prediction accuracy, even more than content or sentiment. Chen Qu highlighted this in their research:

We find that structural features contribute most to the prediction performance.

This suggests that systems can predict user needs effectively without fully understanding every word.
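Structural features of this kind are cheap to compute because they ignore what the words mean. The sketch below derives position and length features from a short conversation. The feature names and the sample dialogue are illustrative and are not taken from the study's exact feature set.

```python
def structural_features(conversation):
    """Compute structural features for each utterance.

    conversation: ordered list of (speaker, text) tuples.
    """
    total = len(conversation)
    starter = conversation[0][0]  # whoever opened the conversation
    features = []
    for index, (speaker, text) in enumerate(conversation):
        features.append({
            "absolute_position": index + 1,
            "normalized_position": (index + 1) / total,
            "length_in_words": len(text.split()),
            "is_starter": speaker == starter,
        })
    return features


conversation = [
    ("user", "How do I find papers on gesture recognition?"),
    ("agent", "Which application area are you interested in?"),
    ("user", "Mostly 3D gestures for video games."),
]

features = structural_features(conversation)
for row in features:
    print(row)
```

A classifier would receive these values alongside any content or sentiment features for each utterance.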

Another innovative approach comes from Weize Kong's team at the University of Massachusetts Amherst. They developed a mixture generative model that considers users' pre-search context, such as reading news, checking emails, or browsing websites. Their experiments showed that incorporating this pre-search activity significantly enhances intent prediction, enabling systems to anticipate user needs based on recent behavior rather than just the immediate query.
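A much simplified way to picture a mixture model with pre-search context is to blend two word distributions: one estimated from what the user just read, and one from general query traffic. The sketch below does this with unigram counts. It is not the authors' model, and the mixing weight `lam`, the sample texts, and the candidate queries are all assumptions.

```python
from collections import Counter


def unigram_model(text):
    """Estimate term probabilities from raw text by relative frequency."""
    counts = Counter(text.lower().split())
    total = sum(counts.values())
    return {term: n / total for term, n in counts.items()}


def mixture_score(query, context_model, background_model, lam=0.7, floor=1e-6):
    """Score a candidate query as a product of mixed term probabilities."""
    score = 1.0
    for term in query.lower().split():
        p_context = context_model.get(term, 0.0)
        p_background = background_model.get(term, floor)
        score *= lam * p_context + (1 - lam) * p_background
    return score


# Hypothetical pre-search context and background query traffic.
context = unigram_model("reading an article about gesture recognition with depth cameras")
background = unigram_model(
    "gesture drawing tutorial gesture drawing tips gesture recognition"
)

candidates = ["gesture drawing", "gesture recognition"]
with_context = max(candidates, key=lambda q: mixture_score(q, context, background))
without_context = max(
    candidates, key=lambda q: mixture_score(q, context, background, lam=0.0)
)
print(with_context, "|", without_context)
```

With the context weight set to zero, the more popular completion wins. Once the recently read article is mixed in, the completion that matches it moves to the top.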

| Method | Approach | Impact |
|---|---|---|
| Classic Machine Learning | Manual extraction of content, structural, and sentiment features | Relies heavily on feature engineering; structural features are key |
| Neural Classifiers | Context-aware modeling (RNNs/CNNs) | Achieves better results by automatically incorporating context |
| Mixture Generative Model | Pre-search context (news, emails, browsing) | Improves query prediction and auto-completion significantly |
| Agent-style Deep Search | Iterative learning from titles and abstracts | Offers higher comprehensiveness compared to standard search engines |

These findings highlight how advancements in intent prediction are reshaping the accuracy and adaptability of search systems, particularly in academic contexts where user needs evolve across multiple queries.

Testing on Academic Benchmarks

Academic benchmarks further validate the performance improvements brought by intent prediction. The MSDialog dataset has emerged as a leading benchmark for evaluating systems designed to handle multi-turn, information-seeking conversations. Unlike single-query datasets, MSDialog captures iterative exchanges in which each turn builds on the previous one, which mirrors how researchers refine their searches across a session.

For example, Netflix researchers developed the "FM-Intent" hierarchical multi-task learning model, which achieved a 7.4% accuracy improvement over the TransAct baseline. This model used intent predictions to enhance next-item recommendations. While this work focused on content recommendations rather than academic search, it underscores the broad applicability of intent prediction across domains.

Another key benchmark is the TREC Session Track, which measures "whole-session relevance" rather than individual query accuracy. Using metrics like session-based Discounted Cumulative Gain, this framework evaluates how well systems support users through multiple related searches. Additionally, Undermind.ai's 2024-2025 benchmarking whitepaper compared their agent-style discovery process with Google Scholar, reporting better performance in handling verbose user prompts.
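The intuition behind a session-based Discounted Cumulative Gain can be sketched in a few lines: results are discounted by rank, as in ordinary DCG, and each query's score is discounted again by how late in the session it was issued. This is a simplified illustration and not the track's official scoring code. The logarithm bases and the relevance grades are assumptions.

```python
import math


def dcg(gains, base=2):
    """Discounted cumulated gain; ranks below the log base are not discounted."""
    total = 0.0
    for rank, gain in enumerate(gains, start=1):
        discount = max(1.0, math.log(rank, base))
        total += gain / discount
    return total


def session_dcg(session, rank_base=2, query_base=4):
    """Sum per-query DCG, discounting queries that come later in the session."""
    total = 0.0
    for position, gains in enumerate(session, start=1):
        query_discount = 1.0 + math.log(position, query_base)
        total += dcg(gains, rank_base) / query_discount
    return total


# Hypothetical relevance grades (0-3) for the top three results of each query.
found_early = [[3, 2, 0], [0, 0, 0]]  # useful results on the first query
found_late = [[0, 0, 0], [3, 2, 0]]   # same results, but only after reformulating

early_score = session_dcg(found_early)
late_score = session_dcg(found_late)
print(round(early_score, 2), round(late_score, 2))
```

A system that surfaces the same relevant papers with fewer reformulations scores higher, which is the behavior a whole-session metric is meant to reward.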

These benchmarks collectively demonstrate the measurable benefits of intent prediction, whether it's refining academic search relevance or enhancing user experience across different search scenarios.

How Sourcely Uses Intent Prediction

Intent Prediction Features in Sourcely

Sourcely takes intent prediction to a new level by focusing on more than just keywords. It dives into the context of your research - whether you paste part of an essay or outline your research goals - to recommend academic papers, generate summaries, and create properly formatted citations that match your needs.

The platform taps into what it calls "citation knowledge", using full-text analysis instead of relying solely on metadata. This approach helps it understand the deeper meaning behind your research goals. MDPI Informatics captures this challenge perfectly:

Discovering relevant research documents from the huge corpora of digital libraries is like finding a needle in a haystack.

Sourcely's intent prediction also identifies your research interests by analyzing the content you provide. This feature addresses a common frustration: understanding why a suggested paper is relevant. Yoonjoo Lee, a lead author on recommendation systems, explains this issue:

Researchers sometimes struggle to make sense of nuanced connections between recommended papers and their own research context, as existing systems only present paper titles and abstracts.

To tackle this, Sourcely provides intent-aware summaries that clearly explain a paper's relevance to your work. This not only makes finding the right sources easier but also speeds up the entire research process.

Time and Accuracy Benefits for Users

With intent prediction, Sourcely reduces the manual effort of sifting through irrelevant sources. Its domain-specific language models ensure that recommendations are precise and tailored to your needs. Given the rapid growth of scientific literature - an estimated 8% to 9% annually, with around 389 million scholarly records available as of 2018 - this capability is a game-changer. By combining content-based filtering with user feedback, Sourcely continually refines its recommendations, making research more efficient over time.

Sourcely Pricing Options

Sourcely backs its advanced intent prediction with flexible pricing plans designed for researchers with varying needs. Here’s a breakdown:

- Free Version: Offers basic features for casual users.

- Paid Plans:

  - $7 Trial: Includes 2,000 characters of processing.

  - $17 Monthly or $167 Annual Plan: Unlocks full features like intent prediction, extensive source access, free PDF downloads, and customizable citation exports.

  - $347 Lifetime Believer Plan: Perfect for researchers seeking lifelong access to all features.

Whether you’re a casual user or a dedicated researcher, Sourcely’s pricing options ensure there’s a plan that fits your workflow.

Conclusion

Main Points

Predicting user intent is reshaping academic research by tackling persistent challenges like the vocabulary mismatch problem. Instead of focusing solely on exact keywords, these systems interpret the underlying needs of researchers, enabling more flexible and exploratory searches that adapt to evolving research objectives.

Interactive intent modeling significantly boosts search efficiency, potentially doubling performance while helping users navigate large databases more effectively. This method takes advantage of our natural ability to identify relevant topics through visual cues, rather than relying on precise recall of search terms.

Platforms like Sourcely bring these concepts to life by providing context-aware recommendations that counteract anchoring bias - the tendency to overly rely on initial search terms. With over 13,000 publications on user intent modeling in the past decade, it's clear that the academic world values this approach.

What's Next for Intent Prediction

The future of intent prediction is moving toward even more interactive and dynamic tools. Researchers can expect visual interfaces that allow them to refine how the system interprets their needs in real time, speeding up discoveries and opening new research avenues.

Advancements in reinforcement learning are on the horizon, aiming to balance well-established findings with fresh, innovative suggestions. Another exciting development is fine-grained citation analysis, which will go beyond counting citations to examine the specific contexts in which papers are referenced - whether in methodology, results, or elsewhere. Researchers are also exploring wearable technology and augmented reality to make literature searches more seamless, even in unconventional environments.

FAQs

How does predicting user intent make academic research more efficient?

Understanding user intent can make academic research much more efficient. By identifying what researchers are searching for, it delivers highly relevant results faster. This means less time spent sorting through unrelated content and more time dedicated to analyzing the sources that matter most.

When search results are tailored to specific needs, the research process becomes both precise and personalized. AI-powered tools take this a step further by offering helpful features like advanced filtering options, reliable summaries, and quick access to downloadable resources, making the entire experience smoother and more effective.

How does predicting user intent enhance academic literature searches?

Predicting what users are looking for during academic searches depends on sophisticated tools like machine learning and natural language processing (NLP). These technologies dig into user queries, examining patterns in wording, structure, and even tone to figure out the real intent behind the search.

Take neural classifiers, for instance. They use contextual clues from queries to boost accuracy, while large language models (LLMs) provide flexible frameworks to interpret a wide range of search behaviors that are constantly changing. By studying user interactions and analyzing search logs, these models can better classify intent, making the search process quicker and more accurate.

This means students and researchers can spend less time sifting through irrelevant results and more time accessing the exact resources they need for their work.

How does user intent prediction improve academic research tools?

User intent prediction is transforming academic research tools by interpreting search queries, user behavior, and context to provide more precise and tailored results. Whether someone is searching for a specific study or diving into a broader subject, these tools can adjust their responses to better align with individual needs.

Technologies like natural language processing and machine learning play a key role here. They help these systems understand not just direct queries but also subtle user behaviors, like browsing habits. Over time, these tools learn and adapt to evolving research interests, making it faster and easier for researchers to locate relevant studies. This minimizes manual effort and simplifies the research process, boosting both efficiency and accuracy.