Best Practices for Modeling Short-Term Search Behavior

Understanding what users want during a single search session is key to improving search systems. Short-term search behavior looks at immediate actions like query changes, clicks, and time spent on results. This differs from long-term behavior, which focuses on patterns over weeks or months. Here's why this matters:

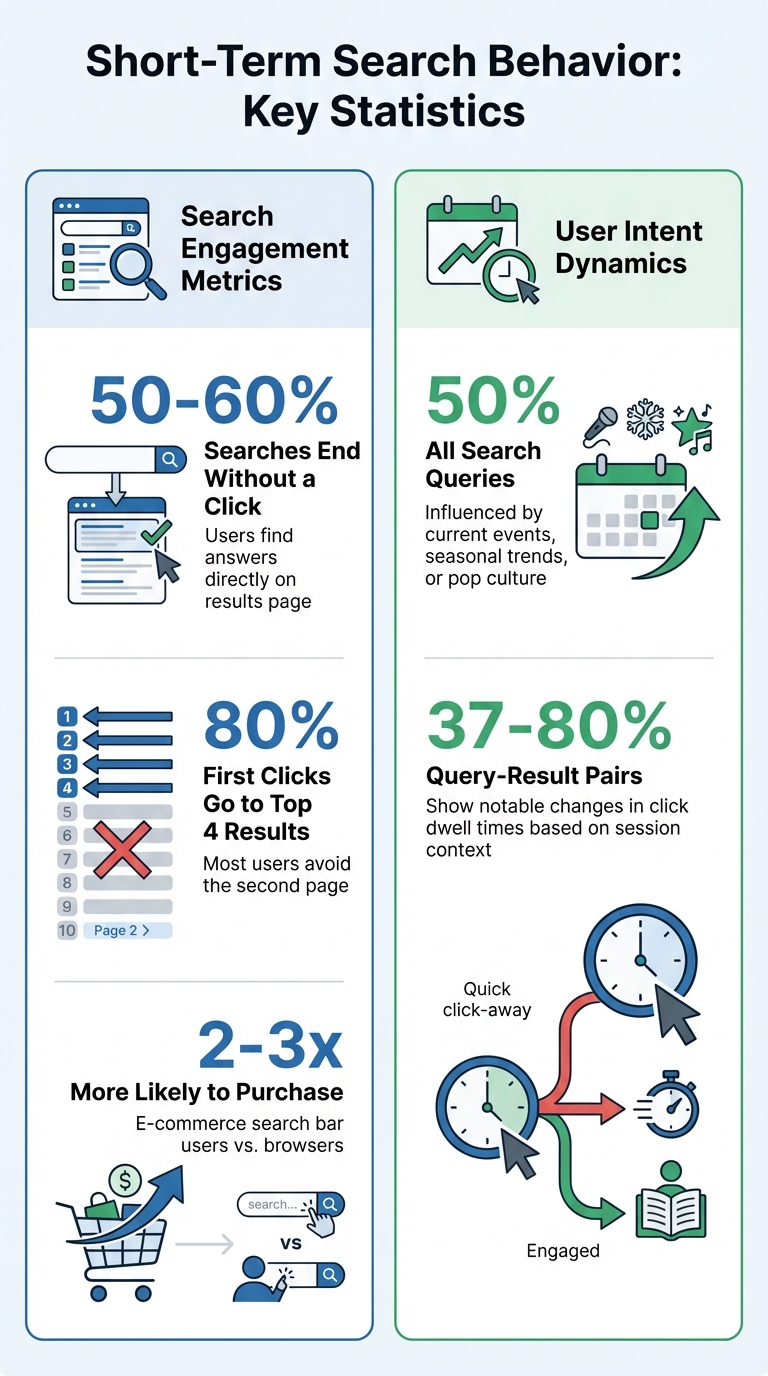

- 50-60% of searches end without a click, as users often find answers directly on the results page.

- 80% of first clicks go to the top 4 results, while most users avoid the second page.

- In e-commerce, search bar users are 2-3 times more likely to purchase than those who browse.

To improve search relevance, focus on session-level data to refine results in real-time while balancing historical trends. Challenges include handling sudden shifts in user intent (e.g., seasonal or event-driven queries) and avoiding overfitting due to noisy, short-term data. Solutions like session-based profiling, sequential prediction, and contrastive learning help systems respond effectively to evolving user needs.

Key Takeaways:

- Combine short-term and long-term data for better personalization.

- Use metrics like nDCG and Relative nDCG Drop (RnD) to measure performance over time.

- Tools like Sourcely can aid researchers in accessing the latest studies and benchmarks.

Short-term modeling isn't just about technical improvements - it directly impacts user satisfaction and business outcomes.

Key Statistics on Short-Term Search Behavior and User Engagement

Main Challenges in Modeling Short-Term Search Behavior

Modeling short-term search behavior comes with two primary hurdles: rapidly changing user intent and temporal data leakage. Tackling these issues is crucial for creating effective, session-based personalization systems. Let’s break down these challenges.

Changing User Intent Over Time

User intent can shift quickly, often influenced by current events, seasonal trends, or pop culture. Research shows that these factors account for nearly 50% of all search queries. This means the same search term can carry entirely different meanings depending on the timing and context.

Take the query "independence day" as an example. According to Google researchers, its intent changes dramatically in early July, leaning toward the U.S. holiday. However, during movie release periods, it often refers to the blockbuster franchise. Another case comes from Microsoft Research, which found that the query "earthquake" had vastly different user expectations before and after the Haitian earthquake. As Stewart Whiting from the University of Glasgow explains:

"Uncertainty about user expectations is a perennial problem for IR systems, further confounded by changes over time."

This variability, often called the "chronotype" of a query, highlights how meanings and associated terms evolve over time. Ignoring these temporal patterns can significantly reduce search precision and relevance. The challenge becomes even more complex within a single session, where user intent can shift dynamically based on their interactions. For instance, studies show that 37% to 80% of query-result pairs experience notable changes in click dwell times depending on whether the result was the first clicked item in a session. Addressing these dynamic shifts is just as critical as tackling the issue of temporal data leakage.

Temporal Data Leakage and Overfitting

Temporal data leakage occurs when future information unintentionally slips into training datasets, leading models to memorize patterns rather than generalize effectively. This often happens due to overlapping documents and queries in datasets that lack clear, unique identifiers.

For example, systems with high static scores but significant Relative nDCG Drop (RnD) often show signs of overfitting. As noted by LongEval at CLEF 2025:

"Systems that are more robust to the evolution of the test collection were not the top-performing ones."

Short-term behavioral data, while useful for capturing recent trends, is particularly noisy and sparse, which increases the risk of overfitting. Research from Walmart Global Tech revealed that short-term features (e.g., using a 1-month data window) are effective for identifying trends and new products but are also more prone to noise compared to long-term features (e.g., 2-year data windows). To address this, practitioners often use Beta-Binomial Bayesian models to smooth features like click and order rates, reducing the impact of short-term volatility.

Evaluating models across various temporal lags - the time gaps between training and testing datasets - can also help detect when performance starts to degrade as test data diverges from training conditions. This approach ensures that models remain robust even as user behavior evolves over time.

sbb-itb-f7d34da

Effective Methods for Capturing Short-Term User Interests

In the fast-paced world of user interactions, understanding and responding to short-term interests is critical. Here are some practical approaches that help tackle these shifting dynamics.

Session-Based Dynamic Profiling

Session-level modeling keeps track of user behavior in real time, tailoring results based on immediate actions. For instance, Session-Based k-Nearest Neighbors (SKNN) compares the current session to past ones using cosine similarity on binary item vectors. Meanwhile, User Stateful Embedding (USE) takes this a step further by storing previous model states, enabling real-time updates without reprocessing all historical data. Researchers Malte Ludewig and Dietmar Jannach highlight the effectiveness of straightforward methods:

"Conceptually simple methods often lead to predictions that are similarly accurate or even better than those of today's most recent techniques based on deep learning models."

In March 2024, USE was tested on Snapchat user behavior logs across eight tasks in both static and dynamic settings. By periodically updating user embeddings, it outperformed traditional stateless models in both efficiency and accuracy. As Zhihan Zhou, the lead author, notes:

"User embeddings should be periodically updated to account for users' recent and long-term behavior patterns."

Similarly, Pinterest introduced TransAct in May 2023. This Transformer-based model combines real-time sequential modeling with batch-generated long-term embeddings, striking a balance between responsiveness and cost-effectiveness.

These session-based methods pave the way for sequential prediction tasks, which offer another effective way to anticipate user actions.

Using Sequential Prediction Tasks

Sequential prediction tasks analyze users' chronological interaction history to predict their next steps. One common approach, Next-Item Prediction, treats each action as part of a sequence, forecasting what comes next. By using techniques like data augmentation and stabilizing input distributions, Recurrent Neural Networks (RNNs) have achieved notable improvements in metrics like Recall@20 and MRR@20 for session-based recommendations.

Some systems go beyond simple next-item predictions. For instance, generative tasks can forecast future queries, clicked documents, or related searches within a session. In February 2025, Meituan implemented the Next-Session Prediction Paradigm (NSPP) through its SessionRec framework. This approach predicted multiple items users might engage with in future sessions rather than focusing solely on the next interaction.

Another trend in sequential prediction is future W-behavior prediction, which aims to predict a broader range of upcoming user actions. By capturing a wider behavioral horizon, this method enhances the quality of user embeddings and provides a more robust understanding of user interests.

While sequential prediction focuses on forecasting actions, contrastive self-supervision hones in on refining user intent by analyzing behavior patterns.

Contrastive Self-Supervision for Short-Term Representation

Short-term user behavior is often inconsistent - users may perform different actions or queries that reflect the same intent. Contrastive learning tackles this challenge by training models to identify similar behavior sequences as representing the same intent, even when specific actions differ. Yutao Zhu and colleagues explain:

"A user behavior sequence has often been viewed as a definite and exact signal reflecting a user's behavior. In reality, it is highly variable: user's queries for the same intent can vary, and different documents can be clicked."

This approach trains models to compare augmented sequences against unrelated ones, forcing them to focus on the underlying intent rather than surface-level patterns. Tests on two real-world query log datasets showed that contrastive learning outperformed leading context-aware document ranking models.

The USE framework further integrates contrastive learning with a Same User Prediction (SUP) objective, which determines whether two behavior sequence segments belong to the same user. When tested on Snapchat behavioral data across eight tasks, USE consistently outshined established baselines, proving that contrastive self-supervision is a powerful tool for capturing short-term user representations in dynamic settings.

Improving Model Performance in Short-Term Scenarios

Once you've captured short-term user interests, the challenge becomes keeping your model accurate as user behavior shifts over time. Here, we'll look at strategies to maintain relevance and ensure your model stays effective in real-world applications.

Reducing Temporal Drift in User Data

User behavior is always changing, so it's crucial to test models in ways that reflect these shifts. One approach is using temporal splits - evaluating models on data collected months after training to simulate real-world conditions.

In 2024, the LongEval Lab at the CLEF conference ran 73 retrieval experiments using datasets called "Lag6" and "Lag8", where test data was 6 and 8 months newer than the training data. Their findings were eye-opening: systems that performed best on standard nDCG scores didn’t always hold up over time.

To measure this drop-off, they introduced the Relative nDCG Drop (RnD) metric. This metric highlights how much performance declines as test data ages. Interestingly, while nDCG rankings stayed consistent across time periods, RnD rankings didn’t. This means a model that’s great today might degrade more quickly than a slightly less effective but more stable alternative.

Another tactic is using hybrid lookback windows. For example, in December 2023, Walmart Global Tech launched a vertical-aware ranking model combining 2-year and 1-month behavioral data. For a query like "new year's eve", the model prioritized 2024 products over outdated 2023 ones. This adjustment moved a 2024 glasses item from position 46 to 13, boosting Marketplace GMV by 0.64% and increasing Add-to-Cart rates for the top 10 results by 0.21%.

Balancing Short-Term and Long-Term Interests

Reducing drift is one thing, but balancing short-term and long-term data is equally important. Different product categories demand different approaches - electronics and fashion trends change quickly, while grocery purchases tend to stay consistent. The key is teaching your model when to lean on recent trends versus historical patterns. This process often involves finding academic sources to validate behavioral theories and model architectures.

Walmart's research offers a great example. A model using only 1-month behavioral data showed a 3.79% lift in the Electronics, Toys, and Seasonal categories but suffered a 0.63% decline in the Food category compared to a 2-year baseline. To address this, Walmart implemented vertical-aware gating, which adjusts the weight of 1-month and 2-year data based on query-level signals. This approach improved Marketplace GMV by 0.64%, increased Add-to-Cart sessions by 0.22%, and reduced session abandonment by 0.16%.

"Incorporating vertical features can indeed enhance the integration of multi-window behavioral features, allowing each to play to its strengths and mitigate its weaknesses." - Qi Liu et al., Walmart Global Tech

| Feature Type | Best For | Strengths | Weaknesses |

|---|---|---|---|

| Long-Term (2 years) | Food, Consumables | Rich historical data, noise-resistant | Misses recent trends, slow to adapt |

| Short-Term (1 month) | Fashion, Electronics | Captures trends, new product friendly | Sparse data, noise-prone |

| Hybrid + Vertical | General Merchandise | Context-aware, balanced | Requires query classification |

This balance is critical for short-term personalization, which lies at the heart of session-based search modeling.

Measuring Generalization with Performance Metrics

To track improvements, you need metrics that account for both immediate and long-term performance. While nDCG measures performance at a single point in time, RnD reveals how performance declines as data ages.

The LongEval study, which used a dataset of 30 million documents and 15,000 queries, found that the highest raw nDCG scores didn’t always translate to consistent performance over 6-to-8-month periods. Often, a model with slower degradation is more useful than one with a high initial peak but rapid decline.

Some researchers also suggest using un-normalized DCG in certain cases. According to Olivier Jeunen, normalization in nDCG can sometimes flip the ranking of competing methods, making DCG a more reliable measure of online rewards.

To ensure your models generalize effectively, test them on data collected at multiple time intervals after training - not just a single snapshot. This approach helps you monitor both immediate performance and its evolution over time, ensuring your model stays responsive to changing user behavior while delivering consistent results in short-term search scenarios.

Tools and Resources for Research and Development

Research tools have become essential for advancing model development, especially when dealing with complex methods and metrics. Staying updated with the latest research is critical for building effective short-term search models. For instance, the LongEval 2025 benchmark was recently introduced to assess model performance over time. Similarly, frameworks like Re3 tackle issues such as temporal drift and data leakage. These tools and benchmarks help differentiate models that degrade quickly from those that maintain their effectiveness, highlighting the importance of streamlined academic research tools.

Using Sourcely for Academic Research

Sourcely (https://sourcely.net) is a powerful resource for researchers looking to access the latest literature on topics like temporal information retrieval and session-based modeling. Its precise publication date filters are invaluable for finding up-to-date studies on staleness resistance and query-aware gating mechanisms, especially for research conducted post-2024. For example, Sourcely can help locate benchmarks like Re2Bench, which researchers at the University of Science and Technology of China used in September 2025 to achieve a 0.920 R@1 score on temporal relevance tasks.

The platform provides access to millions of sources, making it easier to explore studies on topics like lookback time windows and temporal alignment. It also supports research into advanced model updating techniques, such as MURR (Model Updating with Regularized Replay), which helps prevent catastrophic forgetting. With its filtering options, Sourcely allows users to compare approaches like session-based dynamic profiling versus long-term historical behavior, offering a comprehensive perspective on balancing short-term and long-term modeling interests.

Another standout feature is Sourcely's citation export tool, which simplifies the process of organizing complex bibliographies for longitudinal studies. This can save researchers hours when compiling references for studies on temporal generalizability or evaluating frameworks that account for "lags." Sourcely offers a free version with basic features, while paid plans start at $17 per month, unlocking advanced capabilities like essay uploads and more refined search filters. By providing access to current academic literature, Sourcely helps researchers refine their methods and address challenges such as temporal drift and data leakage effectively.

Conclusion: Main Points for Short-Term Search Behavior Modeling

Modeling short-term search behavior is all about navigating the challenge of rapidly shifting user intent. This is especially tricky for searches focused on current events or breaking news, as traditional ranking systems often struggle to keep up with these changes. Another layer of complexity comes from the false assumption that user actions are independent of context. Research highlights that between 37% and 80% of query–result pairs show noticeable differences in click dwell times depending on whether the result was the first clicked item in a session. These findings emphasize the importance of adopting flexible strategies that can adjust to evolving user needs.

The best results come from a hybrid approach that blends session-based dynamic profiling with long-term historical data. By combining the strengths of both, this method outperforms relying on either one alone. However, achieving this balance requires fine-tuning the weight assigned to context for each query.

To stand out, successful models need to focus on real-time adaptation and rigorous evaluation. While inconsistent research frameworks and varying assumptions across the field make it hard to establish universal benchmarks, tools like Sourcely offer valuable resources for researchers working on temporal information retrieval and session-based modeling.

Building strong models of short-term intent requires integrating multiple data sources - queries, search result clicks, and general web page visits. Context-aware timing models should take priority over basic metrics, but it’s also worth noting that not every search task benefits from complex sequence modeling. In some cases, simpler features can perform just as well. Ultimately, the key lies in creating a balanced, adaptive system that uses both immediate session data and historical trends to deliver personalized and effective search results.

FAQs

What is considered a 'search session' in short-term modeling?

A 'search session' in short-term modeling describes a sequence of user activities involving a search engine. These activities can include submitting multiple queries, refining those queries, and interacting with the displayed results. All of this happens within a single uninterrupted period, with the goal of addressing a specific need for information.

How can teams prevent temporal data leakage in search logs?

Teams can avoid temporal data leakage in search logs by using frozen, time-stamped web snapshots rather than depending entirely on date filters. Date filters can often fall short because of issues like updated articles, dynamic related-content modules, or inconsistent metadata. By introducing stricter retrieval safeguards, teams can achieve more reliable and secure modeling of short-term search behavior.

When should a model favor recent behavior over long-term history?

When dealing with short-term, shifting user needs or preferences, a model should focus on recent behavior. Why? Because recent actions are often a direct window into the user’s current context and intent. They provide clues about what the user is looking for right now, which is typically more relevant than older patterns when trying to meet immediate demands.