Over-Reliance on AI: Problems and Solutions

AI tools are transforming research, but over-reliance can create serious problems. Here's what you need to know:

Main Issues:

- AI "hallucinations" generate false or biased outputs, leading to errors.

- Heavy dependence on AI reduces critical thinking and essential research skills.

- Ethical concerns arise, such as AI-driven academic fraud and loss of transparency.

Why It Matters:

- Flawed AI outputs can damage research credibility (e.g., COVID-19 diagnostic studies where only 15% met quality standards).

- Blind trust in AI risks producing more data but less understanding.

Solutions:

- Verify AI outputs manually and cross-check sources.

- Use AI for minor tasks like formatting but retain control over critical decisions.

- Disclose AI usage to maintain transparency.

- Regularly practice non-AI research methods to sharpen skills.

AI should assist, not replace, your judgment. Thoughtful integration ensures better research outcomes while preserving academic integrity.

Main Problems of Over-Relying on AI in Research

Relying too heavily on AI in research can compromise accuracy, hinder skill development, and erode ethical standards.

Accuracy Problems and Bias

AI tools can sometimes generate outputs that appear credible but are entirely fabricated. These are commonly called "hallucinations." A striking example came to light in May 2023 in the case of Mata v. Avianca. Lawyers submitted a court brief that cited six nonexistent cases, all generated by ChatGPT. Their failure to verify the citations led to court sanctions.

Another issue is the quality of data used by AI systems. A systematic review of AI diagnostics for COVID-19 revealed significant problems, including data leakage and poor-quality datasets. These flaws can lead to unreliable conclusions.

AI systems can also reinforce pre-existing biases by tailoring responses to align with user expectations. As researcher Csernatoni cautioned:

"Overreliance on AI-generated content risks creating an 'echo chamber' that stifles novel ideas and undermines the diversity of thought".

Such accuracy issues not only distort research findings but also weaken the critical thinking required for meaningful scientific progress.

Loss of Critical Thinking and Research Skills

When AI-generated outputs are taken at face value, researchers risk losing essential analytical skills. Studies have found that frequent AI use correlates with diminished critical thinking. For instance, a 2025 study involving 666 participants found that those who relied heavily on AI demonstrated lower critical thinking abilities. Notably, greater confidence in AI was associated with less critical thinking.

This reliance can lead to what some experts call "cognitive debt." Researchers bypass the intellectual effort of forming hypotheses, analyzing data, and drawing conclusions, which are all crucial elements of scientific inquiry. As Christopher Dede, Senior Research Fellow at Harvard Graduate School of Education, aptly put it:

"If AI is doing your thinking for you... that is undercutting your critical thinking and your creativity".

Academic Integrity Issues

Overusing AI doesn't just raise technical concerns - it also poses ethical challenges. Generative AI is increasingly being exploited by so-called "papermills" to churn out fraudulent or low-quality academic papers, which undermines the credibility of peer-reviewed research. Even AI detectors, designed to identify such misuse, have shown high rates of false negatives, especially when paraphrasing tools are employed.

Another issue is the illusion of understanding. Researchers may believe they fully grasp a concept simply because an AI model provides accurate predictions. However, since many AI models operate as "black boxes", they offer no real insight into how or why they reach their conclusions. As a Nature analysis pointed out, this trend risks creating a research environment "in which we produce more but understand less".

Finally, the widespread reliance on the same AI tools across entire disciplines can lead to algorithmic monocultures. This means that similar methods and viewpoints dominate, potentially amplifying errors and limiting the diversity of ideas within the scientific community.

How to Use AI Responsibly in Research

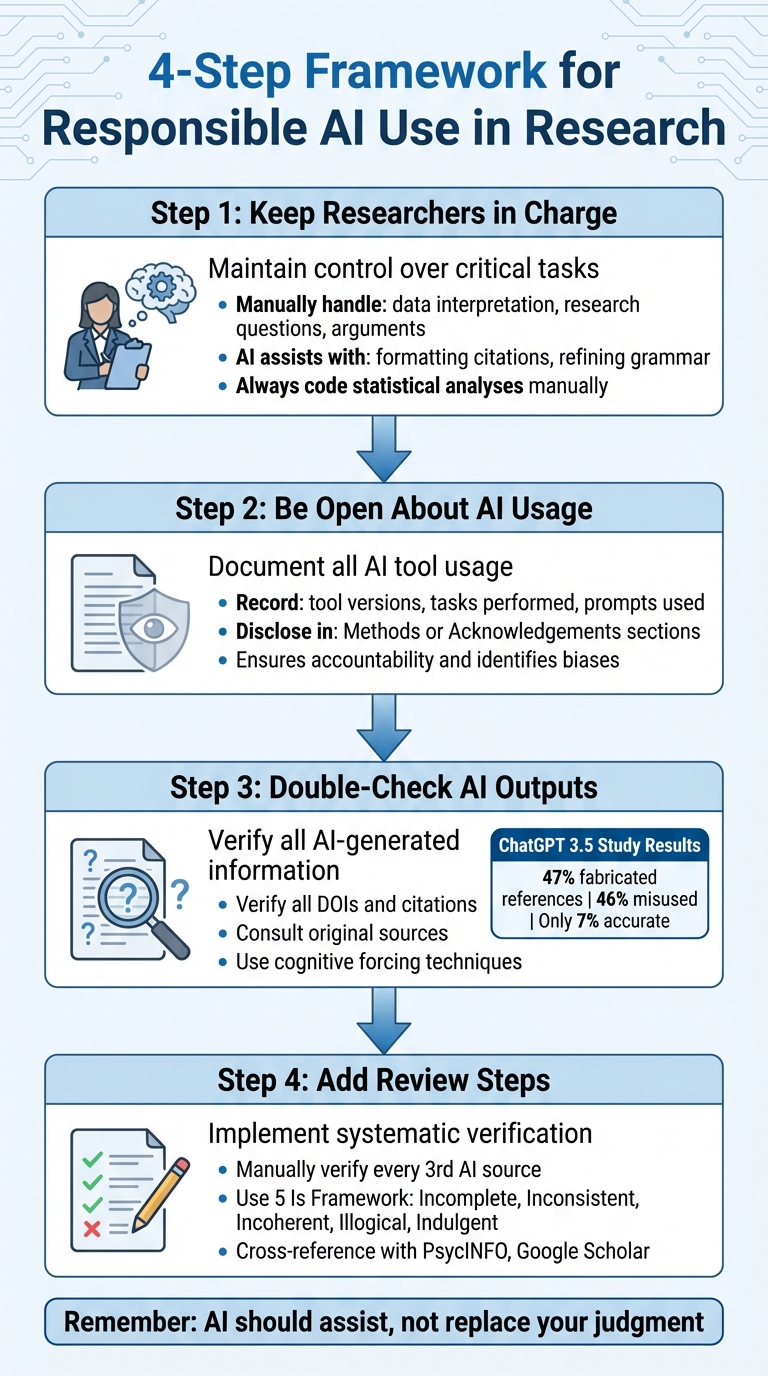

4-Step Framework for Responsible AI Use in Research

Using AI responsibly in research means treating these tools as helpers, not as replacements. Researchers must stay fully accountable for their work - AI systems cannot be credited as authors, inventors, or copyright holders. As Rose Sokol, PhD, Publisher of APA Journals and Books, explains:

"To be an author you must be a human. The threat for students and researchers is really the same - over-relying on the technology."

These principles ensure that researchers maintain control over every critical decision in their work.

Keep Researchers in Charge of Key Tasks

AI should only assist, not take over, essential academic responsibilities. Researchers need to manually handle tasks like interpreting data, crafting research questions, and building arguments. AI can help with less critical tasks such as formatting citations or refining grammar, but activities like coding for statistical analyses should always be done manually to fully grasp the results. By automating minor tasks, researchers can focus more on the creative and analytical aspects that drive impactful research.

Be Open About AI Usage

Transparency is non-negotiable when using AI in research. Keep a record of all AI tools used, including their versions, tasks performed, and prompts provided. Many academic journals now require researchers to disclose AI usage in sections like Methods or Acknowledgements. This practice not only ensures accountability but also helps identify and address potential biases stemming from the AI's training data.
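Keeping that record is easier with a consistent structure. The sketch below logs each AI interaction and then condenses the log into a disclosure sentence. The field names (`tool`, `version`, `task`, `prompt`, `used_on`) and the statement wording are illustrative assumptions. Individual journals set their own disclosure requirements.

```python
"""Minimal AI-usage log that can be condensed into a disclosure statement.

Assumption: field names and the statement wording are illustrative only,
not a format required by any particular journal.
"""
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AIUsageRecord:
    tool: str        # name of the AI tool
    version: str     # model or release version, as reported by the tool
    task: str        # what the tool was used for
    prompt: str      # the prompt actually submitted
    used_on: date    # when the output was generated


@dataclass
class AIUsageLog:
    records: list[AIUsageRecord] = field(default_factory=list)

    def add(self, record: AIUsageRecord) -> None:
        self.records.append(record)

    def disclosure_statement(self) -> str:
        """List each tool/version once, with the distinct tasks it was used for."""
        if not self.records:
            return "No AI tools were used in preparing this work."
        tasks_by_tool: dict[tuple[str, str], list[str]] = {}
        for r in self.records:
            tasks = tasks_by_tool.setdefault((r.tool, r.version), [])
            if r.task not in tasks:
                tasks.append(r.task)
        parts = [
            f"{tool} ({version}) for {', '.join(tasks)}"
            for (tool, version), tasks in tasks_by_tool.items()
        ]
        return ("The authors used " + "; ".join(parts)
                + ". All AI-assisted output was reviewed and verified by the authors.")
```

The full prompts stay in the log itself. They can go in supplementary material, while the short statement fits a Methods or Acknowledgements section.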

Double-Check AI Outputs

Never accept AI-generated outputs at face value. A study of ChatGPT-3.5 found that 47% of the references it produced were fabricated and 46% were real but cited inaccurately. Only 7% were both real and accurate. This highlights the importance of verifying all AI-generated information. Even advanced systems need human checking. AlphaFold achieved a median GDT score of 92.4 out of 100 in the CASP14 structure-prediction assessment, yet it still relies on researchers to test and refine its predictions. Always verify DOIs and consult the original sources to confirm accuracy and context. Building deliberate review steps into your workflow, a method known as cognitive forcing, can help you avoid blindly accepting AI outputs.
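Part of DOI verification can be automated. The sketch below does three things:

- It checks that a DOI is well formed.
- It looks the DOI up in the public Crossref REST API (`https://api.crossref.org/works/{doi}`).
- It compares the registered title with the title the AI claimed.

The regex is a common heuristic for modern DOIs, not the full DOI specification. A "not found" result is a prompt to check by hand, not proof of fabrication, because some DOIs are registered with other agencies such as DataCite.

```python
"""Sketch: check an AI-supplied DOI against the Crossref record.

Assumptions: the regex is a heuristic for modern DOIs; a 404 from Crossref
only means Crossref has no record, not that the work cannot exist.
"""
import json
import re
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional

# Heuristic: "10." + 4-9 digit registrant code + "/" + suffix.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/[-._;()/:A-Z0-9]+$", re.IGNORECASE)


def looks_like_doi(doi: str) -> bool:
    return bool(DOI_PATTERN.match(doi.strip()))


def normalize_title(title: str) -> str:
    """Lowercase and collapse punctuation/whitespace so formatting differences don't count."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", title.lower()).split())


def titles_match(claimed: str, registered: str) -> bool:
    return normalize_title(claimed) == normalize_title(registered)


def fetch_registered_title(doi: str, timeout: float = 10.0) -> Optional[str]:
    """Return the title Crossref has on record, or None if Crossref has no record."""
    url = "https://api.crossref.org/works/" + urllib.parse.quote(doi.strip(), safe="/")
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            data = json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None  # not in Crossref: possibly fabricated, or registered elsewhere
        raise
    titles = data.get("message", {}).get("title", [])
    return titles[0] if titles else None


def check_citation(doi: str, claimed_title: str) -> str:
    """Classify one AI-supplied citation; anything other than 'ok' needs manual review."""
    if not looks_like_doi(doi):
        return "malformed DOI"
    registered = fetch_registered_title(doi)
    if registered is None:
        return "DOI not found in Crossref"
    if not titles_match(claimed_title, registered):
        return f"title mismatch (registered: {registered!r})"
    return "ok"
```

A matching title confirms only that the work exists. You still need to read the source to confirm that it supports the claim attached to it.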

Practical Ways to Avoid Over-Reliance on AI

Building on the challenges explored above, there are practical steps you can take to limit your dependence on AI while maintaining the integrity of your research. These measures keep AI a helpful tool rather than a crutch, so you stay sharp and your work remains credible.

Add Review Steps to Your Research Process

Slowing down your workflow on purpose can help prevent blindly accepting AI-generated outputs. For example, you can manually verify every third AI-suggested source in a reliable scholarly database such as PsycINFO or Google Scholar. Another effective method is the 5 Is Framework, which flags outputs as Incomplete, Inconsistent, Incoherent, Illogical, or Indulgent. These checks matter because studies have shown that 38% of AI-generated citations had incorrect or fabricated DOIs, and 16% referenced articles that didn't exist at all. Spot checks are only a minimum. Cross-referencing every citation with a trusted database is the surest way to eliminate these "hallucinated" sources.
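Both review steps can be made explicit in a simple script:

- `spot_check_sample` picks every third AI-suggested source for manual verification.
- `ReviewNote` records any 5 Is flags raised against an AI output.

These names are hypothetical and do not belong to any existing tool. Only the five categories come from the framework described above.

```python
"""Sketch of two deliberate review steps: fixed-interval spot checks and
5 Is flagging. Names (FiveI, ReviewNote, spot_check_sample) are hypothetical.
"""
from dataclasses import dataclass, field
from enum import Enum


class FiveI(Enum):
    INCOMPLETE = "Incomplete"
    INCONSISTENT = "Inconsistent"
    INCOHERENT = "Incoherent"
    ILLOGICAL = "Illogical"
    INDULGENT = "Indulgent"


def spot_check_sample(sources: list[str], step: int = 3) -> list[str]:
    """Return the 3rd, 6th, 9th, ... source (for step=3) for manual verification."""
    if step < 1:
        raise ValueError("step must be >= 1")
    return sources[step - 1::step]


@dataclass
class ReviewNote:
    output_id: str
    flags: set[FiveI] = field(default_factory=set)

    def flag(self, issue: FiveI) -> None:
        self.flags.add(issue)

    @property
    def needs_rework(self) -> bool:
        # Any single flag is enough to send the output back for revision.
        return bool(self.flags)
```

A fixed interval is easy to follow consistently, but a random sample (for example via `random.sample`) is less likely to line up with patterns in how the AI ordered its results.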

Build Your Critical Evaluation Skills

Instead of taking AI outputs at face value, develop a systematic approach to assess their accuracy. For instance, when AI provides a claim, look for independent verification through reliable sources such as government websites, peer-reviewed journals, or institutional databases. Comparing AI-generated summaries with the original abstracts can also help identify overgeneralizations or misrepresentations. Another useful strategy is triangulation - running the same query across multiple AI tools and traditional scholarly resources to spot inconsistencies or overlooked perspectives. As the Cal State LA University Library puts it:

"AI is a starting point, not an end point. Always verify and critically assess before including AI-supported content in your literature review."

These practices naturally reinforce your ability to evaluate sources manually.
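Triangulation becomes concrete when you compare the sources each tool returns for the same query. The sketch below assumes you have collected DOIs from each tool and normalized them. The tool names are placeholders. It reports two things:

- Sources that every tool agrees on.
- Sources that only one tool returned.

A source returned only by one AI tool and missing from the databases is a prime candidate for manual checking.

```python
"""Sketch of source triangulation across tools. Assumes each tool's results
have been reduced to DOIs; tool names are placeholders.
"""
from dataclasses import dataclass


def normalize_doi(doi: str) -> str:
    """DOIs are case-insensitive; strip common prefixes so sets compare cleanly."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


@dataclass
class Triangulation:
    consensus: set[str]           # returned by every tool
    unique: dict[str, set[str]]   # returned by exactly one tool, keyed by that tool


def triangulate(results: dict[str, set[str]]) -> Triangulation:
    if not results:
        return Triangulation(set(), {})
    consensus = set.intersection(*results.values())
    unique = {}
    for tool, dois in results.items():
        others = set().union(*(d for t, d in results.items() if t != tool))
        unique[tool] = dois - others
    return Triangulation(consensus, unique)
```

Agreement between tools is not proof of accuracy, since several AI tools can share the same blind spots. Treat consensus as a starting point for reading, not a substitute for it.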

Practice Research Tasks Without AI

Engaging in manual research is key to preserving foundational academic skills. For example, conducting searches directly in library databases - without relying on AI - can help you uncover critical articles that AI might miss due to paywalls or publication recency. Reading full-text articles instead of relying solely on AI summaries ensures you capture the full context and nuances of the research. Similarly, for computational tasks, writing your own code for statistical analyses rather than using AI-generated scripts can deepen your understanding of the methodology. David Tompkins, a PhD student at Cornell University, emphasizes this point:

"I want to make sure that whatever I'm reporting is something that I fully understand and can stand behind when I submit a paper."
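As an illustration of writing the analysis yourself, the sketch below computes Welch's t statistic and its Welch-Satterthwaite degrees of freedom step by step, using only the standard library. Welch's test is simply an example chosen here. The same habit applies to whatever analysis your study actually uses.

```python
"""Welch's unequal-variances t statistic, written out by hand so each step
of the formula is visible (example choice, not a recommended test for any
particular study).
"""
import math


def sample_variance(xs: list[float]) -> float:
    """Unbiased sample variance: sum of squared deviations / (n - 1)."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / (n - 1)


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Return (t, df) for Welch's t-test comparing the means of a and b."""
    na, nb = len(a), len(b)
    va = sample_variance(a) / na  # squared standard error of mean(a)
    vb = sample_variance(b) / nb  # squared standard error of mean(b)
    t = (sum(a) / na - sum(b) / nb) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


t, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
print(f"t = {t:.3f}, df = {df:.2f}")  # t = -1.897, df = 5.88
```

Once you can reproduce the numbers yourself, comparing them with a library result such as `scipy.stats.ttest_ind(a, b, equal_var=False)` becomes a genuine check rather than an act of trust.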

Using Sourcely to Support, Not Replace, Your Research

Sourcely is built to assist researchers, not to take over their role entirely. When used thoughtfully, it can make the research process smoother while leaving the critical decision-making in your hands. As Elman Mansimov from Sourcely explains, "Think of it as your own personal research assistant". This approach combines efficient source discovery with the rigorous review practices that remain essential for quality research.

Finding Sources with Sourcely

Sourcely simplifies the process of locating credible academic sources by analyzing your research questions or essay prompts. Instead of typing in keywords, you can paste a full research question or even sections of your essay. Free users are limited to 300 characters, while the $17/month SourcelyPRO plan has no limit. The AI then generates concise summaries for each source, helping you decide which ones are worth exploring further. This matches how students already turn to AI: a 2023 study found 24 instances where students used AI to "Understand Complex Topics" and 11 instances to "Find Evidence or Examples".

Verifying Sources with Sourcely's Features

Sourcely also offers tools to help you verify and refine your sources efficiently. Its advanced filters allow you to narrow results by publication date, author, or specific keywords, ensuring your sources meet the required standards. For instance, filtering by publication date ensures you're referencing the most up-to-date research - critical in fast-evolving fields. A systematic review of 415 AI-based COVID-19 diagnostic studies found that only 62 met basic quality standards, underscoring the importance of careful source verification.
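The kind of narrowing these filters perform can be sketched generically. The `Source` record and the `filter_sources` parameters below are hypothetical. They show the idea of combining date, author, and keyword filters, and they are not Sourcely's API.

```python
"""Generic sketch of date/author/keyword filtering over source records.
Hypothetical data model; not Sourcely's actual API.
"""
from dataclasses import dataclass
from typing import Optional


@dataclass
class Source:
    title: str
    authors: list[str]
    year: int
    abstract: str = ""


def filter_sources(
    sources: list[Source],
    min_year: Optional[int] = None,
    author: Optional[str] = None,
    keywords: tuple[str, ...] = (),
) -> list[Source]:
    """Keep sources that satisfy every filter that was supplied."""
    kept = []
    for s in sources:
        if min_year is not None and s.year < min_year:
            continue
        # Case-insensitive substring match against any listed author
        if author is not None and not any(author.lower() in a.lower() for a in s.authors):
            continue
        # Every keyword must appear in the title or abstract
        text = (s.title + " " + s.abstract).lower()
        if not all(k.lower() in text for k in keywords):
            continue
        kept.append(s)
    return kept
```

Filters only narrow the pool. A recent, on-topic paper can still be methodologically weak, so filtering does not replace reading the sources you keep.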

A student from the University of California, Irvine shared this insight:

"It is still crucial to exercise critical thinking and verify information from primary sources when necessary".

Sourcely's filtering tools align with these systematic verification practices, giving you the ability to refine and validate your search results with precision.

Once you've verified your sources, you can seamlessly incorporate them into your work using Sourcely's structured suggestions.

Supporting Your Arguments with Sourcely

Sourcely's "where to insert sources" feature is particularly useful. It suggests specific spots in your paper where evidence can strengthen your arguments, while leaving you in charge of the overall direction. This distinction is key, as the Swem Library at William & Mary emphasizes:

"Whatever tools you use, whether AI-enabled or not, should only enhance your analysis while affirming your intellectual control".

Additionally, Sourcely automates citations in APA, MLA, and Chicago formats, helping you avoid formatting errors and focus on your analysis. The platform uses Retrieval Augmented Generation to base its recommendations on existing research, minimizing - but not entirely eliminating - the risk of inaccuracies common with general AI tools. This is why verifying AI-generated outputs remains crucial; even the best tools require your judgment to ensure accuracy in your final work.
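Retrieval Augmented Generation works in two stages. First, retrieve the stored passages most relevant to the query. Then give only those passages, tagged with their source IDs, to the language model. The toy sketch below shows the retrieval and prompt-building half. It uses bag-of-words cosine similarity as a stand-in for the learned embeddings real systems typically use, and it does not describe Sourcely's actual implementation.

```python
"""Toy retrieval step of Retrieval Augmented Generation (RAG).
Assumption: bag-of-words cosine similarity stands in for learned embeddings.
"""
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Return the k (source_id, score) pairs most similar to the query."""
    q = tokenize(query)
    scored = [(sid, cosine(q, tokenize(text))) for sid, text in passages.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(query: str, passages: dict[str, str], k: int = 2) -> str:
    """Ground the model: include only retrieved passages, each tagged with its source ID."""
    context = "\n".join(f"[{sid}] {passages[sid]}" for sid, _ in retrieve(query, passages, k))
    return f"Answer using only the sources below and cite their IDs.\n{context}\n\nQuestion: {query}"
```

Grounding limits what the model draws on, but the model can still misread or overstate a retrieved passage. The source IDs exist so that you can trace each claim back and check it.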

Conclusion: Balancing AI Use with Academic Integrity

Between 2012 and 2022, the use of AI in academic research increased fourfold. This shift calls for thoughtful application: embracing accurate AI outputs while rejecting flawed ones.

AI should serve as a tool - a way to enhance your work, not replace your judgment. For instance, platforms like Sourcely can help organize references, find relevant sources, and suggest citations to strengthen your arguments. But here's the catch: every source must be verified, and your analysis should reflect genuine understanding. As Alessandra Giugliano from Thesify aptly states:

"AI tools should be used strictly for generating initial ideas or refining research directions - not for conducting the core critical analysis".

The risks of trusting AI blindly are real. During the COVID-19 pandemic, a systematic review of 415 AI diagnostic studies found that only 62 met basic quality standards. Flaws such as poor-quality datasets and data leakage slipped through without adequate human oversight. The lesson is clear: unchecked AI outputs can lead to serious errors.

Maintaining academic integrity starts with understanding AI's limitations and being transparent about its role in your work. Add review steps to your process, and make time for research tasks that don’t rely on AI. This helps sharpen your critical thinking skills. Always cross-check AI-generated outputs with primary sources. As Microsoft researchers warn:

"Overreliance on AI occurs when users start accepting incorrect AI outputs".

Ultimately, you are the final checkpoint. Your oversight ensures that AI remains a supporting tool, not a shortcut that undermines your research integrity.

The key is striking the right balance. Responsible AI integration protects both the quality of your work and the principles of academic scholarship. It’s not about avoiding AI altogether - it’s about using it thoughtfully, keeping your judgment and analytical skills at the forefront. That balance is what upholds the integrity of your research and the academic community as a whole.

FAQs

How can researchers verify the accuracy of AI-generated content?

To ensure the accuracy of AI-generated content, researchers should treat AI as a supporting tool rather than a substitute for critical thinking. This starts with crafting precise and well-thought-out prompts, followed by cross-checking AI outputs against trustworthy, peer-reviewed sources. It's essential to verify claims by examining the original research to ensure the data and conclusions align with the AI's interpretation.

Transparency plays a crucial role in this process. Researchers should document key details such as the prompt used, the AI model version, and the generation date. Additionally, double-checking results for consistency is a must. Steps like expert reviews or conducting statistical re-analysis can provide an added layer of reliability.

Tools like Sourcely can streamline this process. Sourcely allows researchers to quickly find credible, peer-reviewed sources, making it easier to validate AI-generated content while saving time. By combining thoughtful prompt creation, thorough verification, and dependable sourcing tools, researchers can minimize the risks of over-relying on AI.

How can I maintain critical thinking while using AI tools?

To keep your critical thinking sharp, think of AI as a partner rather than a substitute for your judgment. Always double-check AI-generated content by comparing it with trusted sources. Tools like Sourcely are great for accessing and reviewing original research papers to ensure the information is accurate and relevant.

Build a mindful process by questioning AI’s suggestions, identifying any hidden assumptions, and adding your own analysis. Take regular moments to ask yourself: Does this match what I know? Could there be other explanations? These pauses encourage active engagement and help you avoid becoming overly dependent on AI. By blending AI’s speed with thorough fact-checking, you can boost both your productivity and your ability to think critically.

Why is it important to disclose the use of AI in academic research?

Disclosing the use of AI in academic research plays a key role in preserving accountability, transparency, and trust within the scientific community. When researchers fail to clarify how AI was involved, it becomes harder for readers and reviewers to pinpoint its influence, which can lead to questions about potential bias or gaps in reasoning. This absence of transparency can weaken the study’s credibility.

Clear reporting also allows the scientific community to properly replicate and evaluate findings. By detailing which AI tools were used, how they were implemented, and which parts of the work involved AI assistance, researchers enable thorough peer review and reduce the risk of hidden biases skewing the conclusions.

Tools like Sourcely simplify this process by documenting AI-generated suggestions and linking sources directly to specific claims. This not only helps researchers meet disclosure standards but also ensures a more reliable and traceable research workflow, combining the benefits of AI with a commitment to openness.